When ZoomInfo implemented its first microservice (an Apache Solr search service), we selected Kubernetes for container orchestration, expecting it to adequately manage creating and destroying instances and directing traffic between them. Unfortunately, we found issues with the way it handled load balancing and looked for another solution to properly manage traffic to and from our microservice instances. Istio is that solution.

What Is Istio?

Istio bills itself as a service mesh that transparently layers on top of an existing distributed system to connect, secure, control, and observe services. It can provide features like service discovery, failure recovery, authentication, rate limiting, and A/B and canary testing in addition to the load balancing functionality ZoomInfo needs.

How Istio Works

Istio is split into two planes, a data plane and a control plane, as illustrated in the architecture diagram on the Istio website.

The data plane consists of sidecar proxies (an extended version of the Envoy proxy) deployed alongside each microservice instance. These proxies intercept and route all traffic to and from their instance. The control plane manages and configures the proxies according to the rules you define. Older Istio releases also shipped a control-plane component called Mixer, which enforced access and usage policies and collected telemetry from the proxies; it is the sidecar proxies, not Mixer, that actually route traffic.

More information on Istio, its architecture, and the basic services it can provide is available in the Istio documentation.

How Istio Solved Our Load Balancing Issues

Before outlining how Istio fixes the problems we saw with Kubernetes load balancing, it’s important to understand what those problems were.

The Problem with Kubernetes Load Balancing

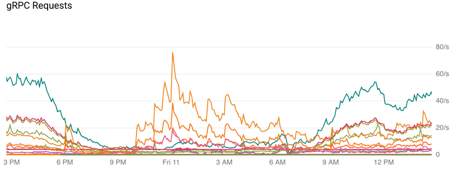

At first it appeared that Kubernetes was doing a fine job with our load balancing. However, over time, we observed that traffic to certain pods would spike then wane just as traffic to others spiked.

The graph above of requests processed by each Kubernetes pod shows this behavior. The amount of traffic handled by each pod varies greatly at any given moment, and which pods are seeing heavy, medium, or light traffic changes over time. This is not only inefficient but also risky: if a single heavily loaded instance goes down, it takes a much larger share of our traffic with it.

It turns out that Kubernetes Services balance load at Layer 4 (the connection level). For HTTP/2 traffic in particular, this means a pod is chosen once per connection, and every request sent over that connection goes to the same pod. Because our microservice uses remote procedure calls over HTTP/2, each consumer opens a single connection per session, whether that session carries one, a dozen, a hundred, or thousands of individual data requests. The spikes we saw were sessions sending many requests at that moment. As those requests dropped off or the sessions closed, traffic to those pods dropped too. A few seconds later, a different busy session might be assigned to a different pod, causing it to spike instead.
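To make the per-connection behavior concrete, here is a minimal simulation under stated assumptions: four hypothetical pods, made-up session sizes, and a balancer that picks a pod round-robin when each connection opens. The real choice depends on kube-proxy's mode, so round-robin is a simplifying assumption. The point is only that the decision happens once per connection, so every request on that connection follows it.

```python
from itertools import cycle


def per_connection_balance(session_request_counts, pods):
    """Simulate L4 (per-connection) balancing.

    Each session opens one long-lived HTTP/2 connection. A pod is picked
    once, when the connection opens (round-robin here, as an assumption),
    and every request multiplexed over that connection goes to that pod.
    """
    load = {pod: 0 for pod in pods}
    next_pod = cycle(pods)
    for request_count in session_request_counts:
        pod = next(next_pod)          # one decision per connection
        load[pod] += request_count    # all of the session's requests follow it
    return load


if __name__ == "__main__":
    pods = ["pod-a", "pod-b", "pod-c", "pod-d"]
    # Hypothetical sessions: a few heavy ones dominate the total.
    sessions = [1, 12, 1000, 3, 100, 5, 2, 8]
    print(per_connection_balance(sessions, pods))
    # → {'pod-a': 101, 'pod-b': 17, 'pod-c': 1002, 'pod-d': 11}
```

Even though connections are spread perfectly evenly (two per pod), one busy session leaves a single pod with almost 90% of the requests.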

The Fix Using Istio

As discussed above, Istio pairs a control plane, which holds the traffic routing rules and pushes them out, with sidecar proxies running alongside each microservice instance, which apply those rules to every request.

This gives us an L7 (application-layer) load balancer, meaning traffic is assigned on a per-request basis, even for HTTP/2 traffic. If a single connection carries 100 requests, those requests are spread across the available instances, which speeds up processing and keeps request traffic to each instance even.
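The same hypothetical sessions can be replayed with a per-request balancer to show the difference. This is a minimal sketch, not Istio's implementation: the proxy's load-balancing policy is configurable, and plain round-robin is assumed here only because it is the easiest to reason about.

```python
from itertools import cycle


def per_request_balance(session_request_counts, pods):
    """Simulate L7 (per-request) balancing.

    The sidecar proxy sees each HTTP/2 request individually, so every
    request gets its own pod decision (round-robin here, as an assumption),
    regardless of which connection or session it arrived on.
    """
    load = {pod: 0 for pod in pods}
    next_pod = cycle(pods)
    for request_count in session_request_counts:
        for _ in range(request_count):
            load[next(next_pod)] += 1   # one decision per request
    return load


if __name__ == "__main__":
    pods = ["pod-a", "pod-b", "pod-c", "pod-d"]
    # Same hypothetical sessions as in the per-connection case.
    sessions = [1, 12, 1000, 3, 100, 5, 2, 8]
    print(per_request_balance(sessions, pods))
    # → {'pod-a': 283, 'pod-b': 283, 'pod-c': 283, 'pod-d': 282}
```

The 1,131 requests end up within one request of each other across pods, because a heavy session no longer pins its traffic to a single destination.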

Because decisions are made per request, this model also allows routing based on attributes of each request, so certain requests can be sent to test servers, or newer functionality can be tried out on a limited subset of users (for example, in A/B testing).
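In Istio, rules like these are written declaratively in a VirtualService resource (header match conditions plus weighted routes to named subsets). The Python below is not Istio's API; it is a hypothetical model of the decision a proxy makes for each request: the first rule whose conditions match wins, and a destination is then chosen in proportion to its weight. The header name `x-user-group` and the subsets `search-v1` and `search-v2` are invented for illustration.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Route:
    """One hypothetical routing rule: exact-match header conditions
    (empty means "match everything") plus weighted destinations."""
    destinations: dict  # destination subset name -> relative weight
    match_headers: dict = field(default_factory=dict)

    def matches(self, headers):
        return all(headers.get(k) == v for k, v in self.match_headers.items())


def pick_destination(routes, headers, rng=random):
    """Return the destination for one request: the first matching rule
    wins, then a destination is drawn in proportion to its weight."""
    for route in routes:
        if route.matches(headers):
            names = list(route.destinations)
            weights = list(route.destinations.values())
            return rng.choices(names, weights=weights, k=1)[0]
    raise LookupError("no route matched the request")


# Hypothetical rules: testers always get v2; everyone else gets a 90/10 split.
ROUTES = [
    Route(destinations={"search-v2": 100}, match_headers={"x-user-group": "tester"}),
    Route(destinations={"search-v1": 90, "search-v2": 10}),
]

if __name__ == "__main__":
    print(pick_destination(ROUTES, {"x-user-group": "tester"}))  # search-v2
    rng = random.Random(0)
    sample = [pick_destination(ROUTES, {}, rng) for _ in range(10_000)]
    print(sample.count("search-v2") / len(sample))  # roughly 0.1
```

Ordering the header-matched rule first is what lets a targeted group bypass the weighted split; a per-connection balancer could not make this choice request by request.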

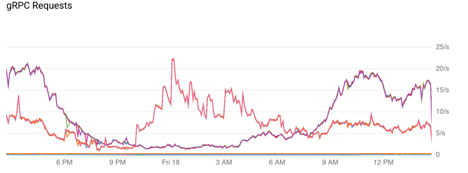

After adopting Istio load balancing, ZoomInfo immediately saw its production traffic even out as shown in the graph below:

Notice that the graph appears to show only two lines fluctuating over time: one for all people-search traffic and one for all company-search traffic. Each is actually several lines, one per microservice instance, drawn directly on top of each other. The traffic is so evenly distributed that there is no visible difference between instances at any meaningful scale.

Downsides of Using Istio

While Istio has a lot to offer, it does have some drawbacks. The biggest is that it’s very new. That brings a risk of faster, larger changes than with mature products, whose large user bases tend to push them toward small, incremental updates. It also means Istio hasn’t been widely adopted yet and there aren’t many experts. With popular, long-established tools, answers are often just a quick web search or a Stack Overflow question away. With Istio, solving the issues and challenges that cropped up during development proved more difficult: ZoomInfo was on the leading edge of the technology, so there wasn’t much general knowledge available yet.

Because ZoomInfo uses a beta version of Istio included in our cloud provider’s Kubernetes offering, we were able to leverage our cloud support contract to get help and advice on implementing it. If you have a support option like this, we recommend taking advantage of it if you plan to try Istio or any other new cloud tool or technology.

Final Thoughts on Istio and Load Balancing Microservices

While there is some risk in using such a new product, and broader support options would be welcome, ZoomInfo has been very happy with its choice of Istio for load balancing its search microservice. It solved the problems we experienced with Kubernetes load balancing, distributing traffic so evenly that each group of instances appears as a single line on our graphs.

We definitely recommend using Istio as part of your microservice toolchain.