Creating a custom disable mechanism for GCS

By ZoomInfo Engineering, Matt Castanien, June 28, 2024

Intro

With the breakneck pace of cloud computing, much of the focus goes to creating new resources faster and scaling infrastructure to meet growing demand. Equally crucial, however, is the often-overlooked process of decommissioning resources. Proper decommissioning not only keeps your cloud environment secure and cost-efficient but also promotes good resource-management practices.

The risks of decommissioning should be obvious: depending on factors such as the quality of documentation and the experience level of staff, decommissions can go wrong quickly and cause instability and critical incidents. Even as an experienced DevOps Engineer, I’ve caused outages while cleaning up infrastructure. Stability and reliability are critical to any business, but you cannot ignore or skip decommissions either. There must be a middle ground that mitigates as much risk as possible.

At ZoomInfo, one of the mechanisms we use to reduce decommission risk is to always try to disable a resource before deleting it. If something was missed in the decommission process and an outage results, we can quickly re-enable the resource. Going straight to deletion could lead to an extended outage and customer impact. More involved alternatives, such as copying the data somewhere else and deleting afterwards, take considerable time and can leave orphaned backups, which are a problem in their own right.

While many resources and services provide this natively, GCP’s Cloud Storage does not (as of this writing). After a serious outage caused by the accidental deletion of a bucket, I began looking for creative ways to mitigate this risk without hindering the decommissioning of buckets.

Options for ‘disabling’ buckets

The first option is to remove IAM permissions from the bucket before deleting it. This has several disadvantages. First, you would need different steps depending on whether the bucket is managed manually or through IaC, which increases the number of decommission steps. Second, for a manually managed bucket, you would need to capture all of its IAM bindings up front and keep them available in the event you had to revert, which introduces human error at several points. Third, depending on how you manage IAM in IaC, removing the permissions may require several, or even dozens, of code changes. If there are more than a few, each must go through the PR review process, and reverting them would take just as long.

I learned about the second option while researching Resource Tags for Organization Policy conditions. Resource Tags can be bound to most asset types, including GCS buckets, and IAM Deny Policies can use Tags in their conditions.

Resource Tags are resources in their own right, not part of a resource’s metadata. This matters because we use Infrastructure-as-Code (IaC) extensively at ZoomInfo: if binding a Tag caused drift in our IaC, Tags would not work for this use case. I validated this by binding a Tag to a bucket and running a Terraform plan against it, and confirmed there was no drift. This has the additional benefit of giving us a single solution for both IaC-managed and manually managed infrastructure.

IAM Deny Policies are evaluated before allow policies, so they apply even to principals with escalated role bindings such as ‘Storage Admin’, or even ‘Owner’ at the project level (Reference: GCP IAM Policy Evaluation). With all of this combined, I decided this would be the setup.
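To make the evaluation order concrete, here is a deliberately simplified Python model. It is a toy, not GCP’s actual evaluation engine: it uses one permission string for both allow and deny (real deny rules use the `storage.googleapis.com/objects.get` form), treats the Tag condition as a plain key/value lookup, and every principal name is hypothetical. It only illustrates why a matching deny rule blocks a principal even when their allow bindings grant the permission.

```python
from __future__ import annotations

from dataclasses import dataclass

# Deny-policy identifier meaning "every principal".
ALL = "principalSet://goog/public:all"


@dataclass
class DenyRule:
    """Toy stand-in for one denyRule; field names loosely mirror the real JSON."""
    denied_principals: set[str]
    exception_principals: set[str]
    denied_permissions: set[str]
    tag: tuple[str, str]  # (Tag key, Tag value) the condition matches


def is_allowed(principal: str, groups: set[str], permission: str,
               resource_tags: dict[str, str], deny_rules: list[DenyRule],
               allow_bindings: dict[str, set[str]]) -> bool:
    """Simplified check: deny rules first, then allow bindings."""
    identities = {principal, ALL} | groups
    for rule in deny_rules:
        key, value = rule.tag
        if (identities & rule.denied_principals
                and not identities & rule.exception_principals
                and permission in rule.denied_permissions
                and resource_tags.get(key) == value):
            return False  # a matching deny wins, whatever roles the principal holds
    # Only reached when no deny rule matched.
    return permission in allow_bindings.get(principal, set())
```

For a bucket tagged `bucket-disabled-marked-for-deletion: true`, a hypothetical ‘owner’ whose allow bindings include the permission is still denied, a member of the exception group is allowed, and the same owner is allowed again once the Tag is removed.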

The Setup

The Tag

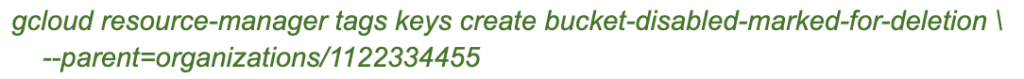

The Tag must be created before it can be used in the IAM Deny Policy. Tags can be created at the Organization level or the project level. Since we want to use this anywhere within the GCP Organization, we went with an Organization-level Tag.

Note that the Tag key and Tag value are separate resources. They are created separately, and a Tag key cannot be deleted while it has a Tag value. Additionally, a Tag value cannot be deleted while it is attached to a resource.

I am a stickler for resource naming conventions. Poor naming conventions are booby traps that ensnare future staff, while good ones often go unnoticed because they are flexible and keep providing context well into the future. (I could write an entirely separate blog post just on Systems Thinking around Naming Conventions.) For this Tag, I consulted several colleagues who also care about naming conventions, and we settled on:

bucket-disabled-marked-for-deletion

The Tag key name is contextually rich: if you saw it bound to a bucket anywhere in the GCP Organization, you would understand its purpose.

You can create the Tag via the Console, or via gcloud CLI. Steps for this can be found here.

With the Tag key created at the Organization level, the next step was to create its value. In this use case, the Tag is referenced in the IAM Deny Policy’s condition. Since we will not tag every bucket, only the ones being disabled, the only appropriate string value is ‘true’.
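A minimal sketch of the two creation steps, assuming the gcloud `resource-manager tags` command group. It only builds and prints the commands so they can be reviewed before running; the organization ID is a placeholder, and the value’s parent uses the key’s namespaced name (`ORG_ID/KEY_SHORT_NAME`).

```python
import shlex

TAG_KEY = "bucket-disabled-marked-for-deletion"
TAG_VALUE = "true"


def tag_create_commands(org_id: str) -> list[list[str]]:
    """Return gcloud argv lists that create the org-level Tag key, then its value."""
    create_key = [
        "gcloud", "resource-manager", "tags", "keys", "create", TAG_KEY,
        f"--parent=organizations/{org_id}",
    ]
    # A value's parent can be the key's namespaced name: ORG_ID/KEY_SHORT_NAME.
    create_value = [
        "gcloud", "resource-manager", "tags", "values", "create", TAG_VALUE,
        f"--parent={org_id}/{TAG_KEY}",
    ]
    return [create_key, create_value]


def namespaced_tag_value(org_id: str) -> str:
    """Namespaced name of the Tag value: ORG_ID/KEY_SHORT_NAME/VALUE_SHORT_NAME."""
    return f"{org_id}/{TAG_KEY}/{TAG_VALUE}"


if __name__ == "__main__":
    # Print for review instead of executing; the org ID is a hypothetical placeholder.
    for argv in tag_create_commands("123456789012"):
        print(shlex.join(argv))
```

The key must exist before the value command will succeed, which is why the commands are returned in that order.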

The IAM Deny Policy

GCP has done an amazing job expanding the set of permissions that IAM Deny Policies support. I asked our TAMs to add Deny Policy support for the permissions we needed. Within a week, the first of several previously unsupported permissions was added, and within a month, every permission I had requested was supported.

The following sections break down each part of the IAM Deny Policy.

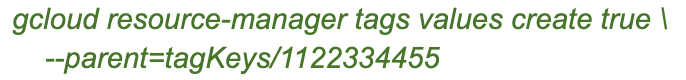

The IAM Deny Policy – deniedPermissions

This was relatively easy: the GCP documentation clearly lists the permissions that IAM Deny Policies support (GCP Deny Permissions Support).

I collected all the permissions used to read and write objects in a bucket, plus the permissions for removing Tag bindings, so that nobody outside the exception group can re-enable a bucket simply by unbinding the Tag. Here is the list used in the IAM Deny Policy as of this writing.

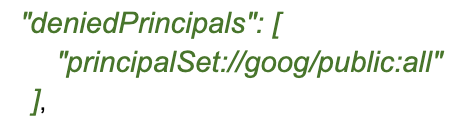
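Deny policies name permissions differently from roles: `storage.objects.get` in a role becomes `storage.googleapis.com/objects.get` in a deny rule. This sketch converts role-style names for Cloud Storage only. The permission subset is illustrative, not the full list above, and the mapping table is a hypothetical helper you would extend for other services after checking GCP Deny Permissions Support for each one’s exact name.

```python
# Deny policies name permissions as SERVICE_FQDN/RESOURCE.ACTION rather than
# the SERVICE.RESOURCE.ACTION form used in roles. Only Cloud Storage is mapped here.
SERVICE_FQDN = {"storage": "storage.googleapis.com"}

# Illustrative subset of object read/write permissions, not the full production list.
OBJECT_PERMISSIONS = [
    "storage.objects.get",
    "storage.objects.list",
    "storage.objects.create",
    "storage.objects.update",
    "storage.objects.delete",
]


def to_deny_format(permission: str) -> str:
    """Convert 'storage.objects.get' to 'storage.googleapis.com/objects.get'."""
    service, rest = permission.split(".", 1)
    if service not in SERVICE_FQDN:
        raise KeyError(f"no FQDN mapping for service {service!r}")
    return f"{SERVICE_FQDN[service]}/{rest}"


denied_permissions = [to_deny_format(p) for p in OBJECT_PERMISSIONS]
```

Raising on an unmapped service is deliberate: silently guessing an FQDN would produce a permission name the deny policy rejects or, worse, never matches.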

The IAM Deny Policy – deniedPrincipals

Since the idea is to ‘disable’ a bucket, we need to deny everyone except a small group of people, like myself, who handle the decommissioning of resources.

Bookmark the GCP Principal Identifiers documentation; it shows the exact format this field expects.
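As a quick reference, here are the deny-policy identifier formats most relevant to this setup, written as small hypothetical Python helpers. `principalSet://goog/public:all` covers every principal, which is what ‘deny everyone’ means here; verify any other format against the GCP Principal Identifiers page before relying on it.

```python
# Deny-policy principal identifier formats used in deniedPrincipals / exceptionPrincipals.
ALL_PRINCIPALS = "principalSet://goog/public:all"


def user(email: str) -> str:
    """A single Google account."""
    return f"principal://goog/subject/{email}"


def group(email: str) -> str:
    """Every member of a Google Group."""
    return f"principalSet://goog/group/{email}"


def service_account(email: str) -> str:
    """A single service account, in any project."""
    return f"principal://iam.googleapis.com/projects/-/serviceAccounts/{email}"


# Deny everyone; the exception list then carves out the decommission group.
denied_principals = [ALL_PRINCIPALS]
```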

The IAM Deny Policy – exceptionPrincipals

Working with our Google Workspace team, we created a Google Group with a contextually rich name and added a small number of DevOps Engineers to it. Members of this group can still see and access the bucket while it is disabled.

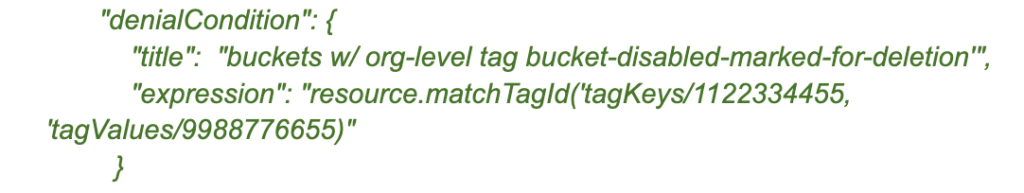

The IAM Deny Policy – denialCondition

This was the trickiest part to get right. IAM Deny Policy conditions support a few different match functions. I initially tested ‘matchTag’, which matches on the namespaced Tag key and value names, but ultimately went with ‘matchTagId’, which matches on their permanent IDs.

(Reference: GCP IAM Conditions Attribute Reference – Resource Tags)
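Putting the pieces together, here is a sketch of a complete deny policy document with a `matchTagId` condition. The Tag key ID, Tag value ID, group email, and permission list are hypothetical placeholders; the real IDs come from the Tag key and value you created.

```python
import json


def build_deny_policy(tag_key_id: str, tag_value_id: str,
                      exception_group: str, permissions: list[str]) -> dict:
    """Assemble a deny policy document; every argument is a caller-supplied placeholder."""
    return {
        "displayName": "Disable buckets tagged bucket-disabled-marked-for-deletion",
        "rules": [{
            "denyRule": {
                "deniedPrincipals": ["principalSet://goog/public:all"],
                "exceptionPrincipals": [f"principalSet://goog/group/{exception_group}"],
                "deniedPermissions": permissions,
                "denialCondition": {
                    "title": "Bucket disabled and marked for deletion",
                    # matchTagId compares permanent IDs (tagKeys/..., tagValues/...),
                    # whereas matchTag compares namespaced names.
                    "expression": f"resource.matchTagId('{tag_key_id}', '{tag_value_id}')",
                },
            }
        }],
    }


if __name__ == "__main__":
    policy = build_deny_policy(
        "tagKeys/111111111111",             # hypothetical Tag key ID
        "tagValues/222222222222",           # hypothetical Tag value ID
        "bucket-decom-admins@example.com",  # hypothetical exception group
        ["storage.googleapis.com/objects.get", "storage.googleapis.com/objects.list"],
    )
    print(json.dumps(policy, indent=2))
```

Redirecting the printed JSON to a file gives you the policy file for the create step.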

Implementation

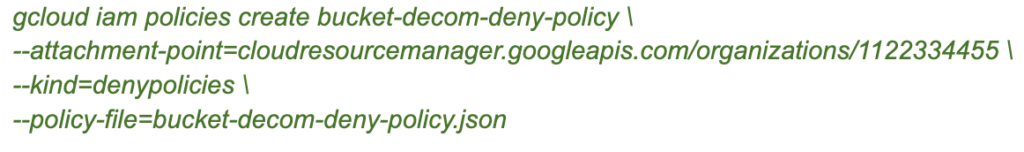

After extensive testing in a dedicated GCP organization, the day came to add the IAM Deny Policy. At the time, we created it manually. The commands in this section require escalated permissions: Google made the right decision not to include IAM Deny Policy permissions in the primitive role ‘Owner’, so you will need the predefined role ‘Deny Admin’ (roles/iam.denyAdmin) at the level of the resource hierarchy where you attach the Deny Policy. In our case, that is the Organization level.

First up, get the Organization ID:

```shell
gcloud organizations list
```

Let’s create the policy:
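A minimal sketch of the create step, assuming the policy JSON was saved locally under a hypothetical file name and given a hypothetical policy ID. The detail most likely to trip you up is the attachment point, which `gcloud iam policies` expects URL-encoded, so each `/` becomes `%2F`. The script only prints the command.

```python
import shlex
from urllib.parse import quote


def create_deny_policy_argv(org_id: str, policy_id: str, policy_file: str) -> list[str]:
    """gcloud argv that attaches a deny policy at the Organization level."""
    # The attachment point is URL-encoded, so every '/' becomes '%2F'.
    attachment_point = quote(
        f"cloudresourcemanager.googleapis.com/organizations/{org_id}", safe=""
    )
    return [
        "gcloud", "iam", "policies", "create", policy_id,
        f"--attachment-point={attachment_point}",
        "--kind=denypolicies",
        f"--policy-file={policy_file}",
    ]


if __name__ == "__main__":
    # Placeholders: use the ID from `gcloud organizations list` and your own names.
    print(shlex.join(create_deny_policy_argv(
        "123456789012", "bucket-disabled-deny-policy", "deny-policy.json")))
```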

Let’s list the policy to make sure it’s there:

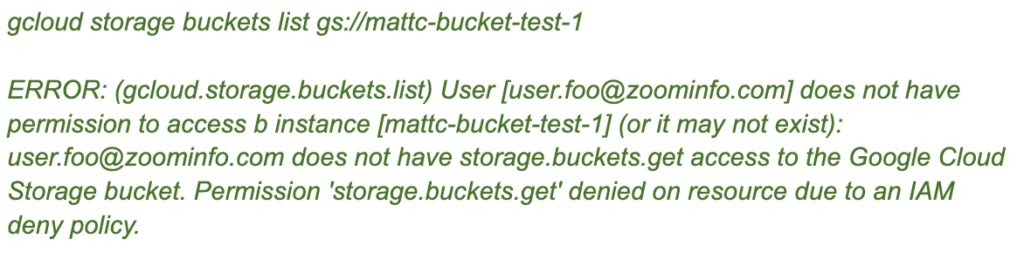

And now, we test:

A few things to point out:

- The end of the message explicitly states that the permission was denied by an IAM Deny Policy. This is very helpful: if a person or a service does run into it, it won’t be confused with an ordinary permission error.

- gcloud currently has a bug where it prints some unrelated text, ‘b instance’. We have reported this to GCP, but as of this writing it isn’t fixed.

- As of this writing, the Cloud Audit Logs entry contains neither the authenticated user nor a unique status code indicating that the denial came from an IAM Deny Policy, so the logs cannot provide that level of granularity.

The below diagram shows three different principal scenarios attempting to access a bucket with the Tag attached.

Final Thoughts

Rolling out this ‘feature’ gives us a step before deletion that can be easily reverted if something turns out to still be using a bucket, even after the decommission analysis. We plan to extend this Tag-and-Deny-Policy ‘disable’ approach to BigQuery to reduce risk on dataset and table decommissions.
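Disabling and re-enabling a bucket then come down to creating or deleting one Tag binding. This sketch builds either command; the bucket name, location, and organization ID are hypothetical. Because the deny policy also blocks Tag binding removal, the re-enable (delete) step would be run by a member of the exception group.

```python
import shlex


def tag_binding_argv(action: str, bucket: str, location: str, tag_value: str) -> list[str]:
    """'create' binds the Tag (disable); 'delete' removes the binding (re-enable)."""
    if action not in ("create", "delete"):
        raise ValueError("action must be 'create' or 'delete'")
    return [
        "gcloud", "resource-manager", "tags", "bindings", action,
        f"--tag-value={tag_value}",
        # Buckets are addressed by their full resource name; '_' stands in for the project.
        f"--parent=//storage.googleapis.com/projects/_/buckets/{bucket}",
        # Bucket Tag bindings are regional, so the bucket's location is required.
        f"--location={location}",
    ]


if __name__ == "__main__":
    value = "123456789012/bucket-disabled-marked-for-deletion/true"  # hypothetical org ID
    print(shlex.join(tag_binding_argv("create", "old-reports-bucket", "us-central1", value)))
```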

Staying consistent and vigilant with decommissions is challenging. Each cloud service or resource type has its own challenges, which makes iterative improvement crucial. In our case, pushback on decommissions after outages caused by human error spurred me to find additional ways to reduce risk and win the reliability and stability champions back to my side.

If your company has little to no decommission process, don’t get discouraged. Start small. Start today. Create a document to collect steps and processes as you address each resource and service, one at a time, and iterate on it each time. Have someone on your team follow the steps on a non-production resource and give feedback. Over time, you will end up with a robust set of manual processes. That is when you can start automating portions of the process.

You should also use your cloud provider’s capabilities to improve your processes iteratively, which will help with automation down the road. For example, GCP’s audit logs for services like Secret Manager and KMS let you easily see whether a Secret version or KMS key is being used, and these logs can be searched with the gcloud ‘logging’ command group (Reference: ‘gcloud logging read’). Use these cloud-native tools to reduce risk as part of each service’s decommission process. If the cloud provider doesn’t offer something you need, or you have an idea for a feature, reach out to your TAM/CSM; if you are a small shop, open a support ticket or feature request with the cloud provider. Be vigilant.
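As an illustration of that kind of check, here is a sketch that builds a `gcloud logging read` query for recent reads of a secret. It assumes Data Access audit logs are enabled for Secret Manager (they are off by default), and the project and secret names are hypothetical. The filter matches on substrings rather than exact method and resource names, so confirm it against your own log entries.

```python
import shlex


def secret_access_log_argv(project_id: str, secret_id: str, days: int = 30) -> list[str]:
    """gcloud argv that looks for recent reads of a secret's versions in audit logs."""
    # Assumes Data Access audit logs are enabled for Secret Manager (off by default).
    log_filter = (
        'protoPayload.serviceName="secretmanager.googleapis.com" '
        'AND protoPayload.methodName:"AccessSecretVersion" '
        f'AND protoPayload.resourceName:"secrets/{secret_id}/"'
    )
    return [
        "gcloud", "logging", "read", log_filter,
        f"--project={project_id}",
        f"--freshness={days}d",  # how far back to search
        "--limit=20",
        "--format=json",
    ]


if __name__ == "__main__":
    print(shlex.join(secret_access_log_argv("my-project", "legacy-api-key")))
```

An empty result over a long enough window is supporting evidence, not proof, that the secret is unused.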

Internally, do not take no for an answer when discussing decommissions with leadership. Help them understand the criticality of keeping the ecosystem clean and tidy. For example, getting rid of unused buckets can save money and reduce data compliance & security risks. Getting rid of unused service accounts and keys reduces attack vectors. Also, leverage your Security, Legal, and Compliance teams to help drive decommissions. If there are no Policies in place that help enforce decommissions, help them formulate and publish them.

It’s also important to track what you decommission and use those numbers as KPIs for yourself or your team. If you use Jira or an equivalent, create a unique label for all decommission tickets so you can track and count them, and do the same for outages. An outage-to-change ratio for decommissions can help you track improvements over time and counter the perception that decommissions are risky.
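For the ratio itself, a small sketch: given (period, label) pairs exported from your ticketing system, it divides decommission-caused outages by decommission changes for each period. The label names are hypothetical; use whatever unique labels your team settles on.

```python
from collections import Counter

# Hypothetical ticket labels.
DECOM_LABEL = "decommission"
OUTAGE_LABEL = "decommission-outage"


def outage_to_change_ratio(tickets: list[tuple[str, str]]) -> dict[str, float]:
    """Per-period ratio of decommission-caused outages to decommission changes.

    tickets holds (period, label) pairs, e.g. exported from a Jira search.
    Periods with no decommission tickets are skipped to avoid dividing by zero.
    """
    counts = Counter(tickets)
    periods = sorted({period for period, _ in tickets})
    return {
        p: counts[(p, OUTAGE_LABEL)] / counts[(p, DECOM_LABEL)]
        for p in periods
        if counts[(p, DECOM_LABEL)]
    }
```

A falling ratio across periods is exactly the kind of evidence that helps win over the reliability and stability champions.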